Intro

This is the backstory to CVE-2024-43488, which disclosed an RCE vulnerability in vscode-arduino.

vscode-arduino was a VSCode extension that added Arduino support. It was developed by Microsoft and had over 2 million installs.

I found and reported this vulnerability 1-2 years ago. The extension was deprecated shortly after I reported it.

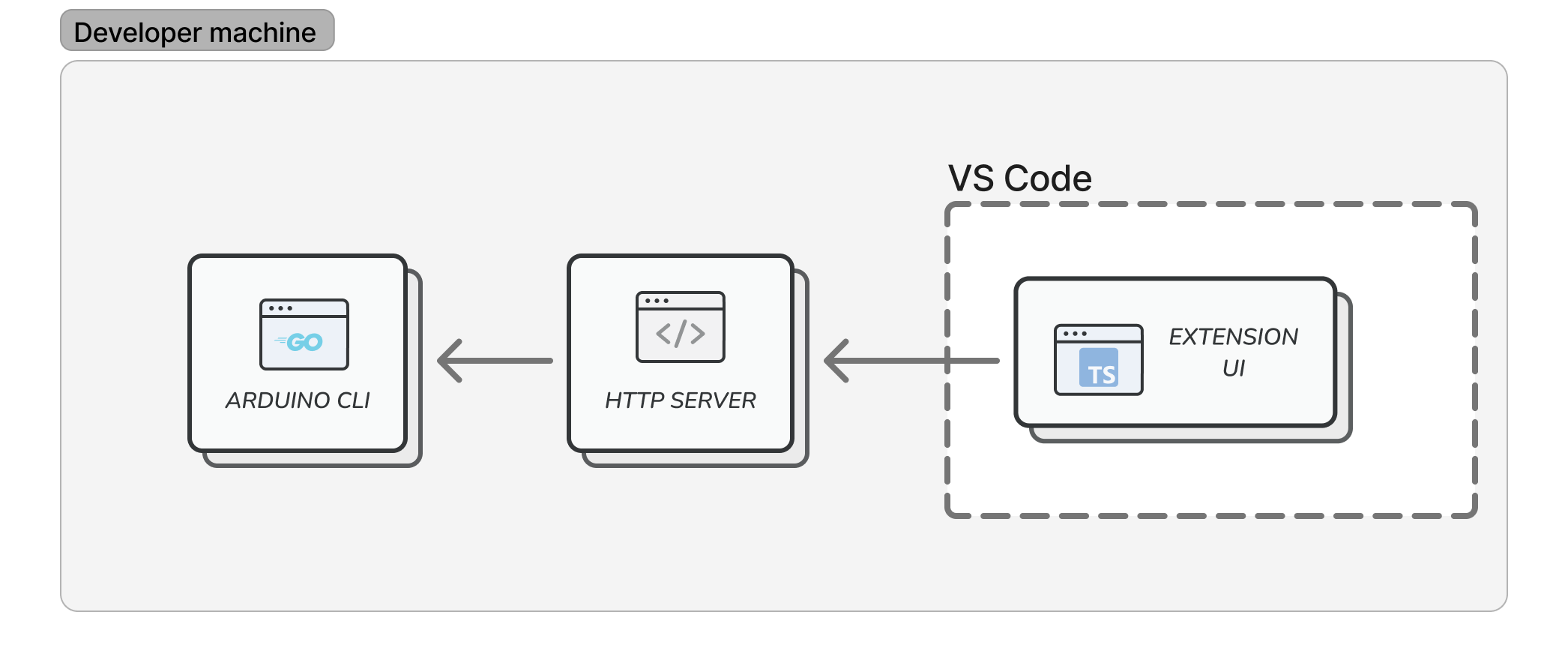

Extension design

Users interact with the extension UI, which sends requests to a local web server, which in turn executes Arduino CLI to handle hardware interaction. All three software components are bundled with the extension.

Vulnerability details

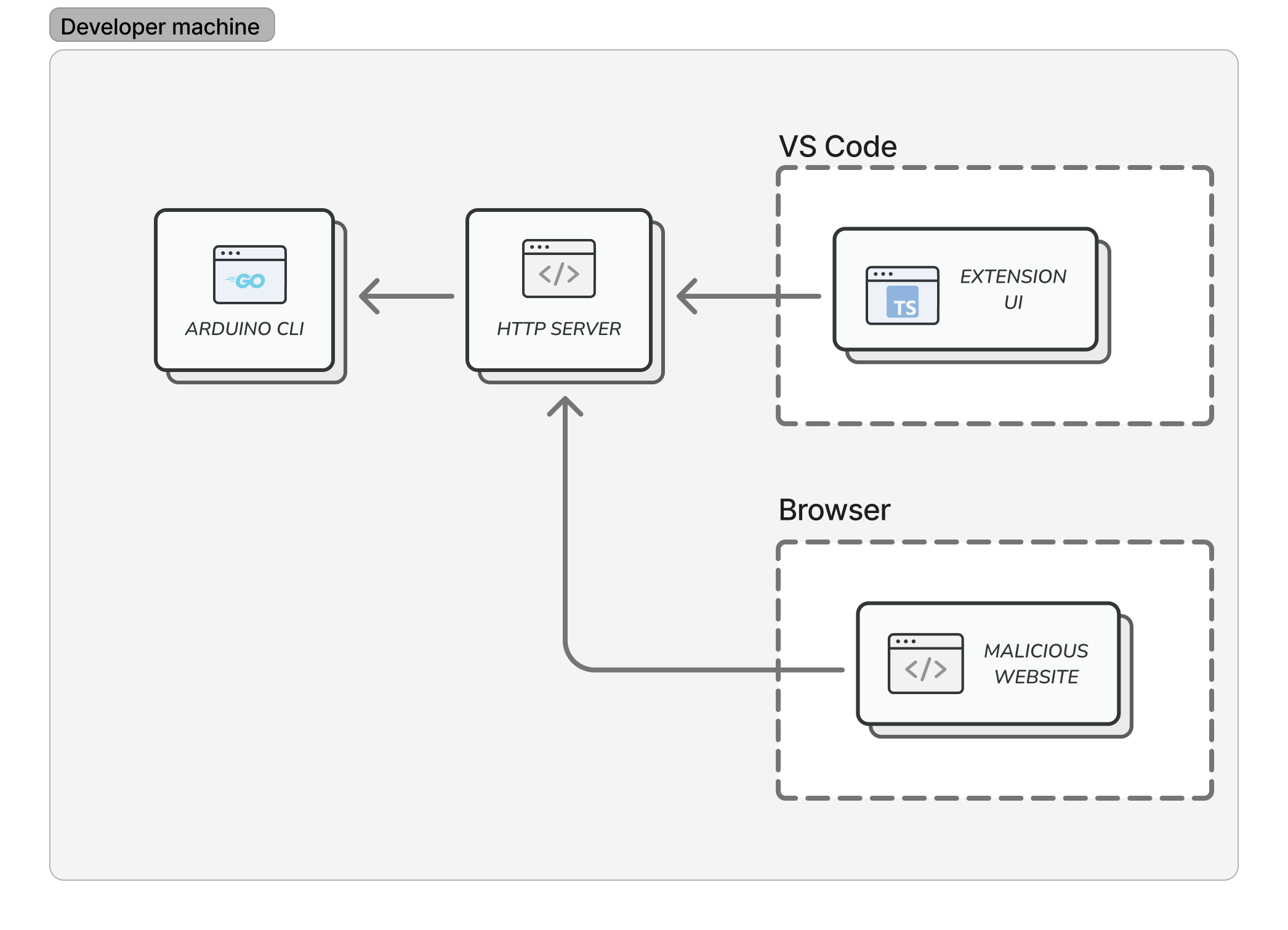

Underpinning the exploit are a few design issues.

- The extension runs an unauthenticated local webserver, which could be accessed by untrusted websites loaded in the user’s browser.

- The webserver exposes access to a CLI tool that was not designed to handle untrusted input.

As a result, an unintended attack surface is exposed to remote attackers: bugs and functionality in both Arduino CLI and the extension webserver.

The following primitives enable RCE.

File delete

Delete arbitrary files or directories on the filesystem.


File load

Load an arbitrary file in the IDE, or open a link in a browser.


File write

Write files to the filesystem. This step is a bit more involved.

First, submit a library to https://github.com/arduino/library-registry. Embed payload files in the library. Libraries that meet requirements are automatically merged into the registry.

Then run the following command, substituting in the library’s name. This places the payload on the target’s computer.


File execute

Execute any VSCode Command. This includes commands that can run scripts.


Exploit

User browses to attacker’s website, which uses client-side JS to:

1. Port scan for the extension webserver
2. Open an iframe for `<attacker domain>:<webserver port>`
3. DNS rebind the iframe to `127.0.0.1`
   - The iframe can send same-origin requests to `<attacker domain>:<webserver port>`, which now translates to `127.0.0.1:<webserver port>`. The iframe’s JS can now access the extension webserver.
4. File-write the payload (a Python script) onto the user’s filesystem
5. File-load the payload into VSCode
6. Execute the payload using the `python.execInTerminal` VSCode command
7. Delete the payload to cover tracks

Steps 1-3 are conveniently done using NCC’s Singularity tool. The remaining steps are done using the primitives above.
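The rebinding step works because the browser's same-origin check compares the scheme, host, and port taken from the URL, not the IP address the hostname currently resolves to. The sketch below illustrates that comparison with the standard `URL` class. The hostname `attacker.example`, the port `3000`, and the paths are placeholders chosen for illustration, not values from the actual exploit.

```typescript
// Compare two URLs the way the same-origin policy does: by scheme, host, and port.
// The IP address that the hostname resolves to plays no part in the comparison.
function sameOrigin(a: string, b: string): boolean {
  return new URL(a).origin === new URL(b).origin;
}

// Hypothetical values, for illustration only.
const iframeUrl = "http://attacker.example:3000/rebind.html";
const requestAfterRebind = "http://attacker.example:3000/api";
const directLoopback = "http://127.0.0.1:3000/api";

// Same hostname and port as the iframe, so the request counts as same-origin
// even if DNS now maps attacker.example to 127.0.0.1.
const rebindAllowed = sameOrigin(iframeUrl, requestAfterRebind);

// Addressing the loopback IP directly is a different origin from the iframe.
const directAllowed = sameOrigin(iframeUrl, directLoopback);

console.log(rebindAllowed, directAllowed);
```

This is why the iframe has to be loaded from the attacker's domain first: the origin stays fixed while the DNS record behind it changes.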

PoC exploit video: https://youtu.be/nM5W67erswc

PoC exploit code: https://github.com/ahmsec/poc-cve-2024-43488 (Contact me for access.)

Mitigations

Here are suggested mitigations for different levels of the ecosystem.

- Extension
  - Use `postMessage` message passing instead of a webserver for communication between UI and CLI components.
- VSCode
  - Sandbox extensions; restrict capabilities such as binding to ports.
- Browsers
  - Implement Private Network Access or Local Network Access. These are proposals to restrict public websites from accessing private IP addresses, including handling the DNS rebinding scenario. In their latest release as of writing, Google Chrome made progress implementing this, but generally browsers have yet to fully adopt this.
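As a rough sketch of the idea behind the browser-level mitigation: the check is keyed on the IP address a request actually connects to, rather than on the hostname, so a rebound domain is still caught. The code below is a simplified illustration, not the proposals' actual algorithm. The address-space labels, the function names, and the reduced set of IPv4 ranges (loopback plus RFC 1918) are assumptions made for this example.

```typescript
// Simplified address spaces, ordered from least to most private.
type AddressSpace = "public" | "private" | "loopback";

const PRIVACY_RANK: Record<AddressSpace, number> = {
  public: 0,
  private: 1,
  loopback: 2,
};

// Classify an IPv4 address. Assumption: only 127.0.0.0/8 and the RFC 1918
// ranges are treated as non-public; the real proposals cover more ranges and IPv6.
function classifyIPv4(ip: string): AddressSpace {
  const octets = ip.split(".");
  if (octets.length !== 4 || octets.some((o) => !/^\d{1,3}$/.test(o) || Number(o) > 255)) {
    throw new Error(`not an IPv4 address: ${ip}`);
  }
  const a = Number(octets[0]);
  const b = Number(octets[1]);
  if (a === 127) return "loopback";
  if (a === 10) return "private";
  if (a === 172 && b >= 16 && b <= 31) return "private";
  if (a === 192 && b === 168) return "private";
  return "public";
}

// A request is flagged when its target address is more private than the
// address the initiating page was loaded from. A browser implementing the
// proposals would gate such a request behind an extra check instead of
// sending it directly.
function isPrivateNetworkRequest(initiatorIp: string, targetIp: string): boolean {
  return PRIVACY_RANK[classifyIPv4(targetIp)] > PRIVACY_RANK[classifyIPv4(initiatorIp)];
}

// Page served from a public address (a documentation-range IP), target rebound to loopback.
const rebindFlagged = isPrivateNetworkRequest("203.0.113.10", "127.0.0.1");
console.log(rebindFlagged);
```

In the DNS rebinding scenario the hostname never changes, but the page was fetched from a public address and the later request lands on `127.0.0.1`, so a check of this shape would flag it.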